When AI Becomes a Burden: Why cURL Killed Its Bug Bounty Program

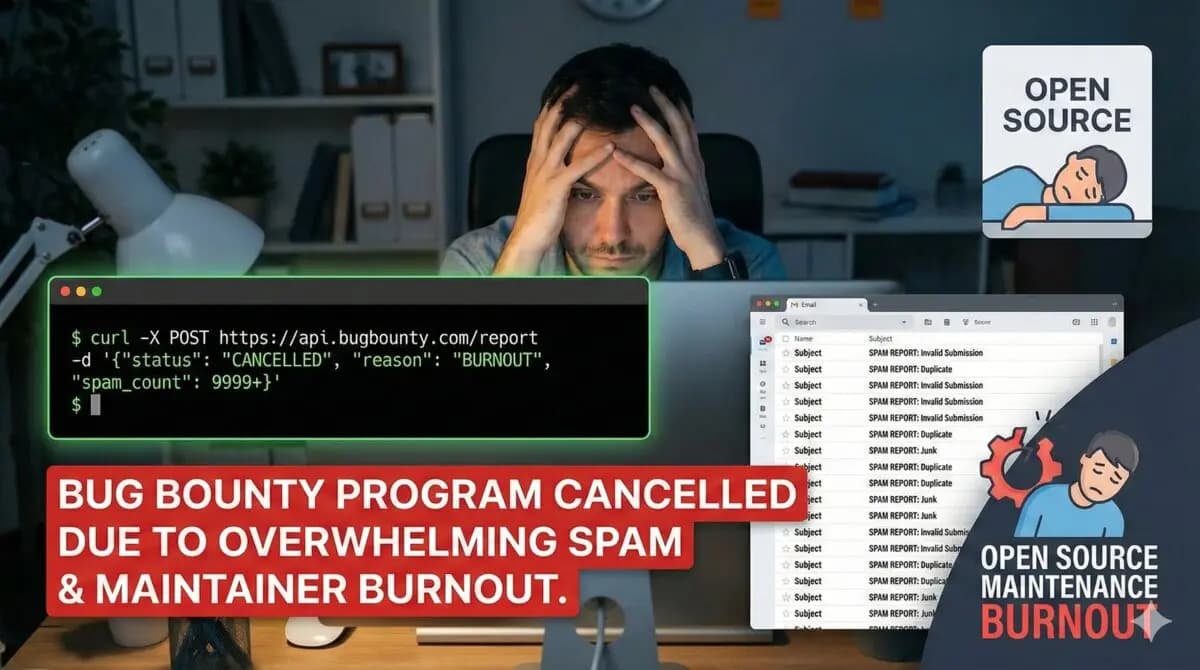

cURL has ended its bug bounty program due to an overwhelming influx of low-quality AI-generated reports. Here is what this means for the future of AI in software development.

We often talk about Generative AI as a productivity booster: a tool that writes code, drafts emails, and even debugs our applications. But recently, we saw the darker side of this efficiency. The maintainers of cURL, the command-line tool and library found in nearly every software stack worldwide, made a drastic decision: they are shutting down their bug bounty program.

Why? Because of "AI slop."

Daniel Stenberg, the lead maintainer of cURL, announced that the project is discontinuing its reward program for security vulnerabilities. The reason wasn't a lack of funds or a lack of interest in security. It was an unmanageable flood of low-quality, hallucinated bug reports generated by LLMs.

The Problem with "AI Slop" in Bug Bounties

For those unfamiliar, a bug bounty program invites security researchers to find and report vulnerabilities in exchange for money. It is usually a win-win: software gets safer, and researchers get paid.

However, the barrier to entry has traditionally been technical expertise. You needed to understand C, memory management, and network protocols to find a bug in cURL. Generative AI lowered that barrier, but not in a good way. It allowed non-experts to paste code into ChatGPT, ask "Is there a security bug here?", and submit the answer without checking it.

The result? Maintainers were buried under a mountain of reports that looked plausible but were technically nonsensical. This is what Stenberg refers to as "AI slop." It takes a human expert significant time to read, analyze, and debunk a complex-looking report, often more time than it takes to verify a real bug. When the signal-to-noise ratio drops too low, the system collapses.
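To make the triage cost concrete, here is a small back-of-the-envelope sketch. Every number in it is hypothetical and chosen only for illustration; none of them come from the cURL project. The function name `triage_cost` is likewise just a name picked for this example.

```python
def triage_cost(bogus_reports, valid_reports, hours_per_bogus, hours_per_valid):
    """Return (total maintainer hours, hours spent per valid report found)."""
    total_hours = bogus_reports * hours_per_bogus + valid_reports * hours_per_valid
    if valid_reports == 0:
        # No real bugs found: every hour spent was pure overhead.
        return total_hours, float("inf")
    return total_hours, total_hours / valid_reports


# Hypothetical inbox: 95 bogus reports that each take 2 hours to debunk,
# and 5 real reports that each take 1 hour to verify.
total, per_valid = triage_cost(95, 5, 2.0, 1.0)
print(total, per_valid)  # 195.0 39.0
```

Under these assumed numbers, each real bug costs 39 maintainer hours instead of 1. The bounty is no longer paying for security; it is paying for reading noise.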

The Quality vs. Quantity Paradox

As a Generative AI developer, I see this as a critical lesson in how we deploy these technologies. LLMs generate statistically probable tokens; they are not truth engines. They excel at pattern matching but struggle with deep logical reasoning in specialized domains like low-level memory safety.

When we incentivize quantity (every submitted report is a chance at a payout) without strict controls on quality, AI becomes a weapon of mass distraction. It allows bad actors or naive users to spam open-source maintainers with zero effort, effectively performing a Denial of Service (DoS) attack on their time.

Lessons for Developers and Companies

This incident serves as a wake-up call for the industry. Here is how we should adapt:

1. Human-in-the-Loop is Non-Negotiable

AI should assist, not replace. If you are using AI to write code or find bugs, you must understand the output. Submitting AI-generated work without review is professional negligence.

2. Proof of Work Mechanisms

Open-source projects and bounty platforms may need to introduce new barriers to entry. This could mean requiring a working exploit script (proof of concept) rather than just a theoretical description, or reputation-based gating for submissions.
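As a rough illustration of what such gating could look like, here is a minimal sketch of a submission filter. It is not how cURL or any bounty platform actually works. The `Submission` fields, the `MIN_REPUTATION` threshold, and the decision strings are all hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    reporter_reputation: int  # hypothetical score, e.g. count of previously accepted reports
    has_poc: bool             # a runnable proof of concept is attached
    poc_reproduced: bool      # the PoC reproduced the issue on a clean build


MIN_REPUTATION = 3  # hypothetical threshold; a real project would tune this


def triage_decision(sub: Submission) -> str:
    """Decide what happens to a report before a human expert reads it."""
    if not sub.has_poc:
        return "reject: proof of concept required"
    if not sub.poc_reproduced:
        return "reject: proof of concept did not reproduce"
    if sub.reporter_reputation < MIN_REPUTATION:
        # Reproducible but from an unknown reporter: accept at lower priority.
        return "queue: low priority"
    return "queue: normal priority"


print(triage_decision(Submission(reporter_reputation=0, has_poc=False, poc_reproduced=False)))
print(triage_decision(Submission(reporter_reputation=5, has_poc=True, poc_reproduced=True)))
```

The design choice here is that the cheap, mechanical checks run first, so a maintainer only spends time on reports that already demonstrate a reproducible problem. Reputation only affects ordering in this sketch, so a newcomer with a working exploit is never shut out.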

3. AI Detection and Policy

cURL tried to mitigate this by requiring disclosure of AI tools, but bad actors rarely follow the rules. We need either better ways to fingerprint AI-generated content or community standards that penalize low-effort AI spam.

The Future of AI in Development

I am still incredibly bullish on Generative AI. I use it every day to build Shopify apps and optimize workflows. But the cURL saga proves that tools are only as good as the hands using them.

Open source is built on trust and human contribution. If we allow AI to flood these channels with noise, we risk burning out the very maintainers who keep the digital world running. Let's use AI to sharpen our skills, not to fake expertise.
